Mobile Autonomous Robots

Jason Prideaux

Intro to Artificial Intelligence Final Project, CIS 571, Professor Art Farley



Overview

By definition, a mobile autonomous robot is an independent mechanical device, free of external control, that is able to move in its environment. Autonomous robots are architected just like regular software agents, but their sensors perceive data from the real world, such as temperature, vision, location, and speed. Likewise, their actuators affect the real world: motors make the robot move, and other physical devices manipulate the world around it. Thus, a robot’s environment is not some programmed world but the real, physical world around it.

There are many types of robots, representing varying levels of sophistication. First, there are remotely controlled robots, which have no intelligence and are not autonomous. Next, the simplest autonomous robots are manipulator robots, which are generally used for very specific tasks such as automobile assembly. Then there are mobile autonomous robots, which are the focus of this paper. Mobile robots are robots that can move in their environment, such as rovers, airplanes, and submersibles. Mobile autonomous robots add intelligence, so that the robot itself can decide how to move in its environment. Lastly, there are hybrid robots, which are mobile robots with manipulators, such as humanoid robots.


The Architecture of a Basic Autonomous Robot

Before moving on, we take a look at how the software architecture of an autonomous robot works. The architecture of an autonomous robot is very similar to that of a typical software agent; software agents are really the starting point for developing an autonomous system for a robot. Figure 1 depicts the high-level architecture of an autonomous system. It is the same as that of a typical software agent, except that it senses and actuates the real world. In addition, the sensors and actuators are more than just programming constructs: they are actual physical devices attached to the robot, as noted earlier.
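The sense, decide, act cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Sensor`, `Actuator`, `FakeRangeSensor`, `FakeMotor`, and `Agent`, as well as the 1.0 m safe distance and the 0.5 speed command, are hypothetical and do not come from Figure 1. On a real robot the two fake classes would be replaced by drivers for physical devices, while the agent logic would stay the same.

```python
from dataclasses import dataclass
from typing import Protocol


class Sensor(Protocol):
    def read(self) -> float: ...


class Actuator(Protocol):
    def apply(self, command: float) -> None: ...


@dataclass
class FakeRangeSensor:
    """Hypothetical stand-in for a physical range finder."""
    distance_m: float

    def read(self) -> float:
        return self.distance_m


@dataclass
class FakeMotor:
    """Hypothetical stand-in for a drive motor; records the last command."""
    speed: float = 0.0

    def apply(self, command: float) -> None:
        self.speed = command


class Agent:
    """Runs one sense, decide, act cycle per call to step()."""

    def __init__(self, sensor: Sensor, motor: Actuator, safe_distance_m: float = 1.0):
        self.sensor = sensor
        self.motor = motor
        self.safe_distance_m = safe_distance_m

    def step(self) -> float:
        distance = self.sensor.read()                               # sense
        command = 0.5 if distance > self.safe_distance_m else 0.0   # decide
        self.motor.apply(command)                                   # act
        return command


sensor = FakeRangeSensor(distance_m=3.0)
motor = FakeMotor()
agent = Agent(sensor, motor)
agent.step()              # path is clear, so the motor is driven forward
sensor.distance_m = 0.4
agent.step()              # obstacle inside the safe distance, so the motor stops
```

Because the agent depends only on the `read` and `apply` methods, the same decision code could be pointed at simulated or physical devices.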


Mobile Robots and How They Work

Mobile autonomous robots have the ability to move around their environment based on their own decisions. The typical environments are land, air, and water. The corresponding types of mobile robots are unmanned land vehicles (ULV), unmanned air vehicles (UAV), and autonomous underwater vehicles (AUV).

Lastly, there are AUVs, which make up a smaller segment of mobile robots. As with other unmanned vehicles, actuators control the movement of the AUV, while sensors gather information. Most AUVs built thus far have been submersibles designed for exploration, so the primary sensor is typically sonar, since GPS and vision are of little use in deep water.


Future and Present Uses of Mobile Autonomous Robots

The Southampton Oceanography Centre has built an AUV called Autosub, seen in Figure 4 [8]. Autosub has been used to explore various areas of the ocean, map ocean wave turbulence, and study ice shelves. In all, Autosub has accomplished over 250 missions, providing oceanographers with valuable scientific data that would have been impractical for humans to collect.

The government and military are also interested in mobile autonomous robots. Looking to take humans out of harm's way, the Air Force has begun using a UAV called Global Hawk. “In 1999 a Global Hawk became the first computer-operated unmanned plane to fly itself across the Pacific, 23 hours to Australia, where it landed itself dead on the center line [4].” Currently, using optical and infrared sensors, the plane is able to fly over dangerous zones and then transmit its data to soldiers on the ground.

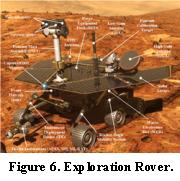

Another ULV in actual use is the space exploration vehicle Sojourner [7]. Sojourner, which explored Mars in 1997, is a rover-based robot. It used vision sensors for sensory input and six wheels to traverse a small part of Mars. It was able to take pictures and soil samples, and it transmitted the data back to Earth for nearly a month.

Future Mobile Robots

Some practical applications that mobile robots could be used for in the future include:

· Search and Rescue – UAVs can quickly search areas. Their vision sensors can find targets that humans might miss. In addition, robots are disposable in dangerous situations.

· Surveillance – UAVs and ULVs can patrol as security to quickly identify suspicious activity.

· Maintenance and Inspection – UAVs and AUVs can be used to inspect and even repair dams, bridges, tall buildings, ship hulls, etc.

The future of mobile autonomous robots promises even more intriguing uses when it comes to space exploration. NASA is working on both AUVs and UAVs to send to the moons of Saturn and Jupiter [1]. It has plans for an AUV to search for life beneath the ice surface of Europa, a moon of Jupiter. There are also ideas for a UAV to explore the atmosphere and image the land of Titan, a moon of Saturn. In addition, NASA is sending a new ULV to Mars in Spring 2003 that is an updated version of the 1997 Sojourner rover [3].


Autonomous Robot Space Exploration

Many feel that the future of space exploration lies in the hands of autonomous robots. Exploring beyond our planet requires robots because of the vast distances involved. For instance, the trip to Mars in the Pathfinder/Sojourner mission took 211 days. In the past, spacecraft were controlled by people on Earth who made decisions for the robot. However, this severely limited the robot’s ability to react to unexpected situations. In addition, such a spacecraft works only while it can communicate with Earth, so a communication interruption can literally cause it to cease operating.

Instead, NASA relies on the Remote Agent, its name for the project in which an autonomous software agent controls the spacecraft [2]. All future spacecraft missions will contain some form of remote agent, thus making future spacecraft mobile autonomous robots. The remote agent controlling the autonomous spacecraft will be able to make decisions based on programmed goals. In addition, the “remote agent increases the reliability of the health of the spacecraft by enabling the spacecraft to fix itself autonomously [2].”

Remote agents are also designed to have flexible, goal-based plans for making decisions. The goals help the agent determine what it should do in any given situation, while the term flexible means that the goals are not specific and that how they are accomplished is left up to the agent. This is a logical approach, since we on Earth cannot predict all the possible situations a spacecraft might encounter.
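To make the idea of a flexible, goal-based plan more concrete, here is a minimal Python sketch. It is not NASA's Remote Agent: every name in it (`Action`, `choose_action`, `run`, the camera and power-bus actions, and the state keys) is a hypothetical illustration. The goals only state what should be true; the agent picks whichever applicable action achieves an unmet goal, so a failed main camera leads it to use the backup without any instruction from Earth.

```python
from dataclasses import dataclass
from typing import Callable, Optional

State = dict[str, bool]


@dataclass(frozen=True)
class Action:
    name: str
    applicable: Callable[[State], bool]   # can this action be used right now?
    effect: tuple[str, bool]              # (state key, value the action sets)


def choose_action(state: State, goals: State, actions: list[Action]) -> Optional[Action]:
    """Return the first applicable action that achieves some unmet goal."""
    for key, wanted in goals.items():
        if state.get(key) == wanted:
            continue                      # this goal is already satisfied
        for action in actions:
            if action.effect == (key, wanted) and action.applicable(state):
                return action
    return None                           # all goals met, or nothing can help


def run(state: State, goals: State, actions: list[Action], max_steps: int = 10) -> list[str]:
    """Apply chosen actions until every goal holds or no action helps."""
    log = []
    for _ in range(max_steps):
        action = choose_action(state, goals, actions)
        if action is None:
            break
        key, value = action.effect
        state[key] = value
        log.append(action.name)
    return log


# Hypothetical spacecraft: the goal says "image taken", not how to take it.
actions = [
    Action("image_with_main_camera", lambda s: s["main_camera_ok"], ("image_taken", True)),
    Action("image_with_backup_camera", lambda s: s["backup_camera_ok"], ("image_taken", True)),
    Action("reset_power_bus", lambda s: True, ("power_ok", True)),
]
goals = {"power_ok": True, "image_taken": True}
state = {"power_ok": False, "main_camera_ok": False,
         "backup_camera_ok": True, "image_taken": False}
plan = run(state, goals, actions)
```

In this run the agent first repairs the power fault and then falls back to the backup camera, which loosely mirrors the self-repair and flexibility described above.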

Ayanna Howard is a leading AI researcher at NASA’s Jet Propulsion Laboratory (JPL). She states that “nearly all of today’s robotic space probes are inflexible in how they respond to the challenges they encounter. We want to tell the robot to think about any obstacle it encounters just as an astronaut in the same situation would do [5].” To achieve this goal, JPL is employing fuzzy logic and neural networks. Fuzzy logic allows the agent to think “not only in terms of black and white, but shade of gray [5].” The neural networks will give the AI agent the abilities of learning and association.

NASA is also interested in sending autonomous robots to Venus, Jupiter, and Saturn. However, clouds cover Venus, and its surface is unknown. Jupiter and Saturn are both gas planets, although their moons do have surfaces. This raises problems such as how to explore a planet that has no surface or where clouds block solar energy. Thus, NASA is interested in UAVs that require very little energy. JPL has started an Aerobot program, which is concerned with building dirigible-type spacecraft to explore the outer planets [10]. Much like any other agent-controlled spacecraft, these autonomous robots would fly into unknown environments with the main goal of gathering information about their environment.
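The "shades of gray" idea behind fuzzy logic, mentioned above, can be shown with a small Python sketch. It is an assumption-laden illustration and not JPL's system: the functions `ramp_down` and `fuzzy_speed` and the 0.5 m and 2.0 m thresholds are made up for the example. Instead of a hard rule such as "stop if an obstacle is closer than 1 m", the obstacle is "near" to a degree between 0 and 1, and the commanded speed blends the "stop" and "go" rules by that degree.

```python
def ramp_down(x: float, full: float, zero: float) -> float:
    """Membership that is 1 at or below `full`, 0 at or above `zero`, linear between."""
    if x <= full:
        return 1.0
    if x >= zero:
        return 0.0
    return (zero - x) / (zero - full)


def fuzzy_speed(obstacle_distance_m: float, max_speed: float = 1.0) -> float:
    """Blend a 'stop' rule and a 'go' rule by how near the obstacle is."""
    near = ramp_down(obstacle_distance_m, full=0.5, zero=2.0)   # degree of "near"
    far = 1.0 - near                                            # degree of "far"
    # Rule 1: if near, speed is 0. Rule 2: if far, speed is max_speed.
    # The weighted average of the rule outputs is a simple defuzzification.
    return (near * 0.0 + far * max_speed) / (near + far)


for distance in (0.5, 1.25, 2.0):
    print(distance, fuzzy_speed(distance))
```

With these thresholds the robot is stopped at 0.5 m, moves at full speed at 2.0 m, and slows smoothly in between rather than switching abruptly.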


Experiment: Mobile Autonomous Robots in a Simulation Environment

With the prohibitive cost of building autonomous robots, many people have chosen to test new ideas and techniques in simulators. Hardware costs for complex robots often run to thousands or even tens of thousands of dollars. Simulators, however, allow researchers to test the most important part of a robot: its brain, or intelligence. The brain of a robot is literally a software program that receives input and directs output. As discussed earlier, this program is usually architected much like a software agent; such agents are seen in many applications today, from games to word processing assistants.

Many simulators in use can imitate a real environment by sending data to an autonomous robot. The robot thinks it is receiving data from the real world, such as vision or temperature. The robot then behaves just as it would in the real world, but its output is routed to the simulator instead of to attached physical hardware. Simulators are useful even when researchers have the robot hardware, because they can interface the hardware with the simulator to test it in situations that would be difficult to produce in the real world. One such simulator, PlayerStage, was built for just this purpose [11].

For the experiment, I chose the iSIM simulation environment [12]. iSIM was originally built to test wearable GPS and wireless networking applications. The applications, designed to run on separate wearable computers, can instead be run on one desktop computer. iSIM then sends data such as location, time, and temperature to the wearable applications, so the applications think they are receiving real-world data. The simulator also mimics an ad hoc wireless network for the wearable devices so that they can communicate. A user can add people to the simulator, and each person corresponds to a wearable application. When the user moves a person in the simulator, the simulator notifies the wearable application just as a real GPS would.

The similarities between iSIM and a robot simulator are clear. Applications think they are receiving real-world data from iSIM, much as robot software thinks it is receiving real sensory data from a robot simulator. The main difference is that agents in iSIM are controlled by the simulation user.

For the experiment, I decided to add intelligence to iSIM. As a first step, I extended the program to create autonomous agents: agents that move in the simulator on their own. This step was fairly trivial to accomplish, and the result was agents randomly walking around the simulator with no purpose. Next, I created functional mobile autonomous robots called Searchers and Rescuers. Autonomous robots, as discussed in this paper, have sensors, actuators, goals, and reasoning. A class was created through which the robots interface for sensing and actuating. Next, both Searchers and Rescuers were given some fairly simple states and goals that correspond to their names. Lastly, some simple reasoning gave them the ability to reach their goals based on their current state and sensory input.

Please go to http://www.cs.uoregon.edu/~jprideau/iSIM/autonomous.html for more information on the robot experiment, how the robots were created, and how it all works. A brief explanation and scenario are given there. You can also download the iSIM program with the autonomous robots.
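The structure described above (one interface class for sensing and actuating, plus robots with simple states and goals) might look roughly like the following Python sketch. It is not the iSIM code and does not use the iSIM API: `SimWorld`, `RobotInterface`, `Searcher`, `Rescuer`, the grid coordinates, and the single-victim scenario are all hypothetical, and the Searcher here only sweeps east along its own row, so it assumes the victim lies on that row.

```python
from dataclasses import dataclass, field
from enum import Enum, auto

Position = tuple[int, int]


@dataclass
class SimWorld:
    """Toy stand-in for the simulator: robot positions and one victim."""
    victim: Position
    robots: dict[str, Position] = field(default_factory=dict)


class RobotInterface:
    """The single class through which a robot senses and actuates."""

    def __init__(self, world: SimWorld, robot_id: str, start: Position):
        self.world, self.robot_id = world, robot_id
        world.robots[robot_id] = start

    def position(self) -> Position:            # sensor: GPS-like location
        return self.world.robots[self.robot_id]

    def sees_victim(self) -> bool:             # sensor: victim on the same cell
        return self.position() == self.world.victim

    def move(self, dx: int, dy: int) -> None:  # actuator: move by (dx, dy)
        x, y = self.position()
        self.world.robots[self.robot_id] = (x + dx, y + dy)


class SearchState(Enum):
    SEARCHING = auto()
    FOUND = auto()


class Searcher:
    """Sweeps east along its row until it senses the victim."""

    def __init__(self, io: RobotInterface):
        self.io, self.state, self.report = io, SearchState.SEARCHING, None

    def step(self) -> None:
        if self.state is SearchState.SEARCHING:
            if self.io.sees_victim():
                self.state, self.report = SearchState.FOUND, self.io.position()
            else:
                self.io.move(1, 0)


class RescueState(Enum):
    WAITING = auto()
    MOVING = auto()
    DONE = auto()


class Rescuer:
    """Waits for a reported location, then walks to it one cell at a time."""

    def __init__(self, io: RobotInterface):
        self.io, self.state, self.target = io, RescueState.WAITING, None

    def step(self, report) -> None:
        if self.state is RescueState.WAITING and report is not None:
            self.state, self.target = RescueState.MOVING, report
        elif self.state is RescueState.MOVING:
            (x, y), (tx, ty) = self.io.position(), self.target
            if (x, y) == (tx, ty):
                self.state = RescueState.DONE
            elif x != tx:
                self.io.move(1 if tx > x else -1, 0)   # close the x gap first
            else:
                self.io.move(0, 1 if ty > y else -1)   # then close the y gap


world = SimWorld(victim=(3, 0))
searcher = Searcher(RobotInterface(world, "searcher", (0, 0)))
rescuer = Rescuer(RobotInterface(world, "rescuer", (0, 2)))
for _ in range(20):
    searcher.step()
    rescuer.step(searcher.report)
```

Because the robots touch the world only through `RobotInterface`, the same Searcher and Rescuer logic could in principle be driven by a different simulator or by real hardware.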


References


UAVs often mimic regular air vehicles but on a different scale.

Current Mobile Robots

A company called Irisbus has started to build buses that have an autopilot function in Figure 5.

NASA has employed the remote agent concept in a rover that is going to Mars in Spring 2003.